The principles of value investing, as described by its best practitioners from Benjamin Graham to Warren Buffett, are widely held to be both eternal across time and universal in their application to all kinds of investments. But are they really so? Or is there something about technology companies that makes them particularly tricky to value? What should we make of the fact that some of the best value investors, most famously Buffett, go out of their way to avoid technology stocks?

I look for businesses in which I think I can predict what they’re going to look like in ten or 15 or 20 years. That means businesses that will look more or less as they do today, except that they’ll be larger and doing more business internationally. So I focus on an absence of change. When I look at the Internet, for example, I try and figure out how an industry or a company can be hurt or changed by it, and then I avoid it … Take Wrigley’s chewing gum. I don’t think the Internet is going to change how people are going to chew gum… I don’t think it’s going to change the fact that Coke will be the drink of preference and will gain in per capita consumption around the world; I don’t think it will change whether people shave or how they shave.

— Warren Buffett

This question is of vital interest to me since:

- I have a technology background (degrees in Engineering and Computer Science; worked in technology companies for many years; co-founded a software company).

- I am convinced, after two decades of experience, that value investing is the right approach to active investing.

But beyond my personal concerns, I will argue in this post that the consequences of technology cannot really be avoided by any investor in this day and age.

Value Investing: a very brief introduction

The term “value investing” probably means different things to different people, but its main principles can be summarized quite briefly. The central idea, articulated most clearly and forcefully by Benjamin Graham, is that a stock should be thought of as a share of the underlying business rather than as a piece of paper or, these days, a set of bits in a brokerage account. It has an intrinsic value, which is the present value of all the future cash the business will generate. Since the future is always uncertain, this intrinsic value cannot be determined with any real precision; the price of a stock fluctuates from day to day as various classes of investors and speculators make informed (or uninformed!) guesses about this unknown intrinsic value. Graham advised investors to ignore this daily distraction and concentrate instead on the long-term fundamentals of the underlying business, arguing that the stock price ultimately approaches the intrinsic value (“in the short run, the market is a voting machine, but in the long run, it is a weighing machine”). A value investor should patiently wait until the occasionally bipolar “Mr Market,” in one of his depressed moods, offers an opportunity to buy a stock below its intrinsic worth. Graham also suggested leaving a margin of safety between the purchase price and the intrinsic value, in case our assumptions turn out to be too optimistic. And when Mr Market, in one of his manic moods, is overvaluing a stock we hold, Graham advises us to sell it. In the memorable words of his most famous student, Warren Buffett, a value investor has to “be fearful when others are greedy and greedy when others are fearful”!
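Graham’s definition of intrinsic value is, at bottom, a present-value calculation, and the margin of safety is a discount applied to its result. The minimal Python sketch below shows both steps. The function names, the ten-year horizon, the 10% discount rate, and the 30% margin are all hypothetical choices for illustration; a real valuation would also need a terminal value and far more careful cash-flow estimates.

```python
def intrinsic_value(cash_flows: list[float], discount_rate: float) -> float:
    """Present value of projected year-end cash flows for years 1, 2, ..."""
    return sum(
        cash / (1 + discount_rate) ** year
        for year, cash in enumerate(cash_flows, start=1)
    )


def max_buy_price(value: float, margin_of_safety: float) -> float:
    """Highest price that still leaves the requested margin below intrinsic value."""
    return value * (1 - margin_of_safety)


# Hypothetical business: 100 of free cash flow per year for ten years.
value = intrinsic_value([100.0] * 10, discount_rate=0.10)
price_limit = max_buy_price(value, margin_of_safety=0.30)
print(f"intrinsic value ~ {value:.2f}, buy below ~ {price_limit:.2f}")
```

With these made-up inputs the sketch prints an intrinsic value of about 614.46 and a maximum purchase price of about 430.12. Small changes to the discount rate or the cash-flow forecast move both numbers a lot, which is exactly why Graham insisted on the margin.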

Buffett has applied these principles to great fame and fortune, further refining them with the help of his talented partner Charlie Munger. He has described his approach in his highly readable annual letters, and in speeches and interviews over six decades of investing. Among the many seminal ideas contributed by Buffett and Munger is that the future cash profits of a business, the source of its intrinsic value, depend crucially on its ability to withstand profit-eroding competition: its “moat”. Businesses that have a moat against competitors are worth a lot more than the average business, since they can usually compound their earnings over time, thereby multiplying their intrinsic value. Investing in moated companies has led to outlier returns for Buffett and Munger, who held on to them for decades, letting the magic of compounding work over time. Finding and holding on to such businesses through the rough and tumble of market mayhem is not easy, however; otherwise everyone would have become a billionaire by following their example!
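The arithmetic behind “moated businesses are worth a lot more” can be sketched by comparing two hypothetical businesses with the same starting earnings: one whose moat lets earnings compound at 12% a year, and one whose profits are held flat by competition. The growth rates, the 10% discount rate, and the 20-year horizon are illustrative assumptions, not figures from Buffett or Munger.

```python
def earnings_path(initial: float, growth: float, years: int) -> list[float]:
    """Earnings for years 1..years, compounding at a constant annual growth rate."""
    return [initial * (1 + growth) ** year for year in range(1, years + 1)]


def present_value(cash_flows: list[float], discount_rate: float) -> float:
    """Discount year-end cash flows back to today."""
    return sum(
        cash / (1 + discount_rate) ** year
        for year, cash in enumerate(cash_flows, start=1)
    )


YEARS, DISCOUNT = 20, 0.10
# Hypothetical moated business: earnings compound at 12% a year.
moated = present_value(earnings_path(100.0, 0.12, YEARS), DISCOUNT)
# Hypothetical business without a moat: competition keeps earnings flat.
eroded = present_value(earnings_path(100.0, 0.00, YEARS), DISCOUNT)
print(f"moated ~ {moated:.0f}, no moat ~ {eroded:.0f}, ratio ~ {moated / eroded:.1f}x")
```

Under these made-up assumptions the moated business comes out worth nearly three times as much, even though both start with identical earnings; the gap widens further with a longer holding period, which is the compounding effect the paragraph describes.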

Buffett and Munger also emphasize that an investor should stay well within his or her “circle of competence” in order to have the courage of conviction to stick with an investment when the market disagrees and its price plunges, as happens from time to time. They are fond of quoting:

I’m no genius. I’m smart in spots – but I stay around those spots.

— Thomas J. Watson Sr., legendary CEO of IBM

Finally, Buffett and Munger recognize that the market is generally quite efficient, with lots of smart investors competing to outdo each other for high stakes. They suggest, therefore, that investors concentrate their portfolios around a few companies within their circle of competence that they truly understand better than the average investor.

Broadly speaking, that completes our brief sketch of the central pillars of value investing.

It all sounds very logical and straightforward. What else could there be but this approach? Yet there are many different investing philosophies which have nothing in common with what is described above.

Perhaps the most influential is the academic idea, forcefully argued by Nobel-winning economist Eugene Fama, that the market is so efficient that the price of any stock is always right. A direct consequence of this theory is that the central notion of value investing, that a stock’s intrinsic value can differ from its market price, makes no sense. This school of thought therefore recommends buying a piece of every company trading in the market, in strict proportion to its market value and without discriminating based on the value of the underlying business (this is called “index investing”). There are many equally distinguished economists who vehemently disagree with Fama’s efficient market hypothesis, among them Robert Shiller (who shared the Nobel with Fama) and Andrei Shleifer.

The market is also full of all kinds of momentum and technical analysis strategies that do not pay any attention to the underlying business. There are also investors who specialize in art and antiques, as well as in precious metals like gold and silver; these are valued entirely for their scarcity, since they do not generate any cash flow that can form the basis for intrinsic value. Finally, there are the so-called “noise” traders, pure speculators, devoid of any reliable information whatsoever!

After more than twenty years of my own experiments in the markets, I am fully persuaded that value investing is the best approach to active investing (with passive indexing being the wise default for most people who do not have the time or inclination to participate actively in the market). It combines both rational and irrational aspects of human nature in a consistent and practical way. Although simple and elegant in concept, value investing is extremely hard in practice. This is mainly because our own temperament gets in the way: it is highly stressful for social, herd-evolved animals like us to be counter-cyclically fearful when others are greedy and greedy when others are fearful!

Temperament aside, my technology background poses an additional problem when it comes to value investing.

A personal dilemma

As already noted, one of the key pillars of value investing is that one should stay within one’s circle of competence in order to have any hope of beating the near-efficient market.

Knowing the edge of your circle of competence is one of the most difficult things for a human being to do … You have to strike the right balance between competency on the one hand and gumption on the other. Too much competency and no gumption is no good. And if you don’t know your circle of competence, then too much gumption will get you killed.

— Charlie Munger

This rings true to me, since the “gumption” needed to buy more of a good stock when it goes down depends on the degree of conviction one has in the fundamental soundness of the underlying company. Such contrarian conviction comes from deep knowledge that, almost by definition, can only be had within one’s circle of competence.

But this poses a practical dilemma: given my background, the center of my circle of competence seems to be precisely those types of technology companies that are so judiciously avoided by nearly all the great value investors of the past!

In theory, there is no difference between theory and practice. In practice, there is!

— Yogi Berra

Perhaps the key to resolving this dilemma lies in understanding exactly what it is about technology that makes it such anathema to the great value investors. As Buffett suggests in the quote at the beginning of this post, the rapid pace of technological change means that today’s dominant company will likely become tomorrow’s lunch for the next start-up in Silicon Valley. Experienced value investors prefer a durable moat against competition; fast-changing technology, however, makes maintaining such a moat for any length of time difficult, if not impossible.

To get a sense of why this might be the case, let us turn next to the technology in the very eye of the raging storm of change — the ubiquitous smartphone.

The great disruptor

Consider just a few of the hardware gadgets that have been replaced by software on our smartphones. Besides being a phone, of course, as well as a texting and emailing device, the smartphone contains software apps that replace what used to be physical calendars, contact books, and scratch pads; apps that replace the compass, the map, and the GPS-based navigator; apps that replace the camera, video camera, and calculator; and apps that convert the phone into a credit card reader. Various apps now monitor our health and keep track of our activities. Software on the phone has made it our social networking machine, our personal shopper, and our travel manager; searching and browsing software has replaced physical dictionaries, encyclopedias, yellow pages, and newspapers. Software like Google Now is even beginning to anticipate our needs intelligently: it informs us of important upcoming events without our asking (“leave for the airport now!”), acting like a personal assistant. The list of hardware and human functions that have been at least partially replaced by software seems to expand daily.

And it’s not just the smartphone. Software is encroaching on heavy industrial equipment too. General Electric now puts chips in its turbines that keep a cumulative count of every rotation, so that its software can predict upcoming failures and schedule maintenance in time. Cars already have dozens of sensors and microprocessors monitoring both the internal and external environment, from engine temperature and oil quality to the distance from other cars; software apps in cars now control the reversing camera, gas gauge, service alerts, GPS maps, radio, music players, and other devices. Taxis are being scheduled, and their approach monitored, by smartphone apps. There are sensors in the generators at the electrical utility plant, along the transmission lines, and at the meter at home, making the whole grid smarter about distributing electricity. Intel chips are already monitoring the “flow” at “smart urinals” at Heathrow airport, to help schedule maintenance during low-use periods. Software can even “print” a prosthetic bone replacement customized to fit an individual elbow or knee: software is entering our very bones!

Venture capitalist Marc Andreessen’s trenchant phrase, “Software is Eating the World,” captures the reach of this pervasive and powerful phenomenon.

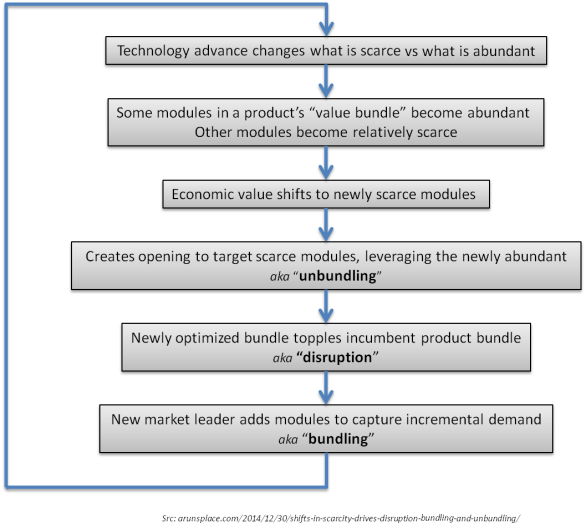

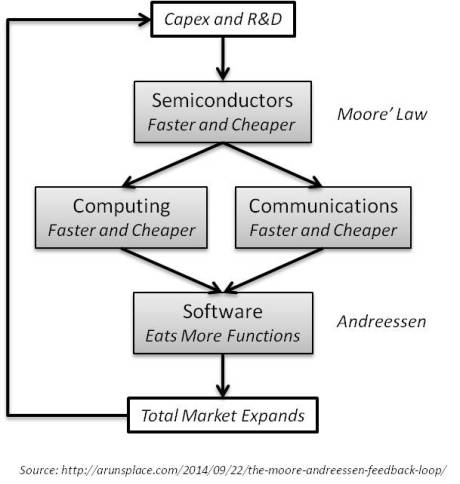

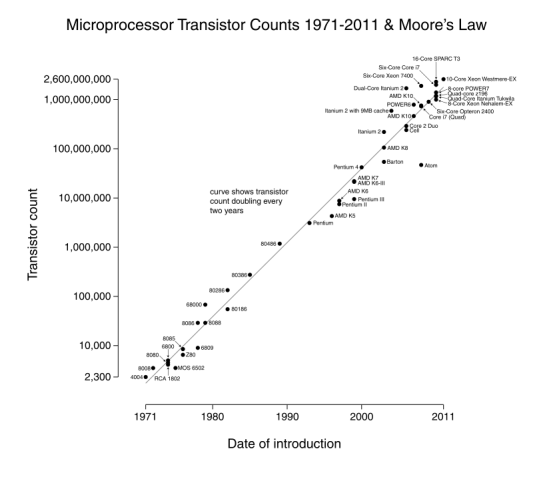

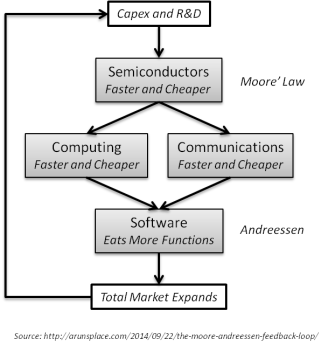

Software by itself, of course, could not have accomplished all this transformation and disruption. In a previous post, I described the “Moore-Andreessen” positive feedback loop between semiconductors and software that drives this disruptively creative force. As semiconductors get cheaper and faster every year, both computing and communications become more capable, enabling software to “eat” more and more functions performed today by people or hardware. This extended reach expands the total market for semiconductors, funding yet more investment in advances that make semiconductors even faster and cheaper, which completes the self-reinforcing loop.
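A positive feedback loop like the one described above can be illustrated with a deliberately crude toy model. The sketch below assumes, purely hypothetically, that the addressable market is inversely proportional to the cost of computing, and that a bigger market funds a faster annual cost decline. Every parameter (`base_decline`, `feedback`, `max_decline`) is made up and uncalibrated, so only the qualitative shape matters: with the feedback switched on, costs fall faster than they would at a fixed rate.

```python
import math


def simulate_loop(years: int, feedback: float,
                  base_decline: float = 0.10, max_decline: float = 0.50) -> list[float]:
    """Toy model of a chip-cost / software-reach feedback loop (hypothetical parameters).

    Returns the relative cost of computing at the end of each year, starting from 1.0.
    """
    cost = 1.0
    history = []
    for _ in range(years):
        # Cheaper computing lets software absorb more functions, so the
        # addressable market grows as cost falls (assumed: market = 1 / cost).
        market = 1.0 / cost
        # A larger market funds more R&D, speeding up this year's cost decline.
        # The cap keeps the yearly decline below 100% so cost stays positive.
        decline = min(base_decline + feedback * math.log(market), max_decline)
        cost *= 1.0 - decline
        history.append(cost)
    return history


no_loop = simulate_loop(20, feedback=0.0)     # fixed 10% annual decline
with_loop = simulate_loop(20, feedback=0.05)  # decline accelerates as the market grows
print(f"after 20 years: {no_loop[-1]:.4f} without feedback vs {with_loop[-1]:.4f} with it")
```

Setting `feedback=0.0` recovers a plain constant-rate decline, which makes it easy to see how much of the improvement in the other run comes from the loop itself.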

There are serious investing implications of this loop. The following is just a small sample of companies that once dominated their niches and are now either gone or fundamentally transformed by the encroachment of software:

This should be of critical interest to all value investors, since companies in the process of being eaten alive can often seem attractive (inexpensive on the basis of the usual valuation ratios) right until their very end. New kinds of “value traps” lie in wait for investors unaware of the disruptive power of software.

Even more surprisingly, some companies that seem to be perpetually unprofitable on the usual earnings basis can sometimes turn out to be quite robustly moated! Consider TCI, the formidable cable monopoly assembled by John Malone, a veritable grandmaster of the information economy. As reported by WSJ’s Mark Robichaux in Cable Cowboy: John Malone and the Rise of the Modern Cable Business, TCI, remarkably, never reported positive earnings on a GAAP basis over its entire three decades of existence and yet was eventually bought by AT&T for 48 billion dollars!

Incidentally, even some of Buffett’s most cherished moats have not escaped this sort of software disruption. One of his favorite investments, the Washington Post, eventually lost its advertising moat to Google and was recently sold to Jeff Bezos, the CEO of Amazon and another master of the information economy. And long-time Buffett holdings like Walmart and Tesco are under attack from Amazon these days and seem to be slowly losing their vaunted moats.

In other words: Buffett clearly ignores software, but will software ignore Buffett!?

Embracing my spots

Reading an earlier draft of this post, my wife, a practicing physician, raised an interesting question at this point: “Surely technological change is not a new phenomenon, and value investors seem to have successfully avoided it so far; what is so different now?”

I think the answer lies in the exponential nature of the semiconductor-software feedback loop. Disruptive change started within the limited circle of technology companies at first, but the relentless arithmetic of exponential growth (for instance, semiconductors are billions of times more powerful and cheaper now than just a few decades ago) means that this circle grows concentrically every year, and is beginning to intersect virtually every aspect of our world. To get a sense of this, just consider the fact that there are now more than a billion smartphones in the world, up from zero just seven years ago. Furthermore, phone companies are projecting that the remaining four billion people carrying ordinary feature phones today will also convert to smartphones soon, as prices continue to plunge exponentially due to the power of the hardware-software loop described above (some Indian smartphones cost less than 35 dollars today). And, as the earlier examples of hardware-eating apps suggest, a smartphone acts like a Trojan horse for proliferating software, spreading it all over the world.

The unavoidable conclusion: avoiding technology will no longer be a viable investing strategy

Compelled by this line of reasoning, and since the center of my circle of competence seemed to lie within the concentrically expanding circle of technology, I thought it wiser to heed Jacobi’s classic maxim:

Invert, Always Invert!

— Carl Jacobi, 19th century mathematician, on solving difficult problems

Instead of avoiding technology, I decided to invert and fully embrace the dreaded intersection of value investing and technology as an area of competitive advantage, a personal niche, in the world of investing.

This, however, is much easier said than done, since the rapid pace of technological change really does make durable moats hard to establish and maintain, and moats are critical to compounding value.

It’s not supposed to be easy… Everything that is important in investing is counter-intuitive, and everything that’s obvious is wrong!

— Charlie Munger

I have to confess at this point, though, that the counter-intuitive aspect of investing is exactly what attracts me to it in the first place! I think it is somewhat analogous to the “aha” pleasure of scientific discovery. One has to be deeply and thoroughly informed about the existing state of all the relevant knowledge. Such knowledge, however, while necessary, is not sufficient, in either science or investing. The crucial next step is to form a non-obvious hypothesis about what is currently unknown (in science) or mispriced (in investing), and to be proven right over time! Just as no scientist is likely to get any credit for rediscovering something already known, no investor is likely to be rewarded for investing in companies that are already well appreciated by the market. The equivalent of that deadly phrase “already published” in science is “already priced into the stock” in the world of investing.

The strange economics of information

Given this conclusion, it seems that serious investors can no longer avoid coming to grips with the subtle differences between information-based and physical goods that might affect moats and valuations. I will save a full discussion of these differences for a future post (update: see The Strange Economics of Information); here is a sampler:

- Information goods usually have high fixed costs of initial production but virtually zero costs of reproduction. Since the marginal cost of an information good is nearly zero, its price also tends to head towards zero in the presence of competition.

- Information goods often have to be experienced in order to be valued. Producers of information often have to give away some version of it for free and invest heavily in building a reputation for quality.

- Near-zero variable costs imply that an information-based product can be reproduced in billions of units with very little additional investment; hence a successful product can scale to massive volumes with surprising speed and efficiency.

- Near-zero incremental costs give rise to the unusual pricing patterns often found in information-based products: the same product is sold at different prices in multiple versions with superficial variations. Even free versions sometimes make economic sense!

- Information-based goods have to interconnect with many complementary products in order to work; such interconnections eventually lead to network effects and high switching costs.

- Information-based networks often obey the non-intuitive economics of increasing returns. They change quickly because of positive feedback loops: virtuous and vicious cycles with tipping-point adoption dynamics.

- Information-based goods usually have decreasing supply costs as their fixed costs are amortized over larger volumes; in addition, thanks to network effects, demand for them increases with every incremental user.

- The information age will likely be governed by a series of monopolies, each successive disruptor toppling the older incumbent.

- New kinds of moats exist in the information-based economy.
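Two of the points in the list above, fixed costs amortized over volume and demand rising with each incremental user, can be made concrete with two small formulas. The sketch below compares the average cost per unit of a hypothetical information good with that of a hypothetical physical gadget, and uses Metcalfe’s law (value proportional to the number of possible pairwise connections, n(n-1)/2) as one common, and much-debated, heuristic for network effects. All cost figures are invented for illustration.

```python
def average_cost(fixed_cost: float, marginal_cost: float, units: int) -> float:
    """Average cost per unit: fixed cost spread over volume plus marginal cost."""
    return fixed_cost / units + marginal_cost


def metcalfe_value(users: int) -> int:
    """Number of possible pairwise connections among users (Metcalfe's heuristic)."""
    return users * (users - 1) // 2


# Hypothetical products: software (big fixed, tiny marginal) vs. a physical gadget.
for units in (1_000_000, 100_000_000):
    software = average_cost(10_000_000, 0.01, units)
    gadget = average_cost(1_000_000, 5.00, units)
    print(f"{units:>11,} units: software ${software:.2f}/unit, gadget ${gadget:.2f}/unit")

# Under Metcalfe's heuristic, connections per user grow with the size of the network.
for users in (10, 100, 1000):
    print(f"{users:>5} users: {metcalfe_value(users) / users:.1f} connections per user")
```

At a million units the software costs more per unit than the gadget ($10.01 vs $6.00); at a hundred million it costs about $0.11 against the gadget’s $5.01, because the gadget’s marginal cost never goes away. Meanwhile the connections available to each user rise from 4.5 to 499.5 as the network grows from 10 to 1,000 users.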

Information is the new oil

Value investors like to invest in companies with a tangible book value, which often comes from owning “real” things such as fields full of oil or gold. Buffett, to his credit, was one of the first to discover the merits of owning intangible assets like the rights to produce an addictive product like Coca-Cola or to a popular brand like Gillette or American Express. Since information is intangible by its very nature, all value investors will soon have to deal with this new type of company, whose intangible assets can appreciate due to network effects rather than depreciate like physical plant and machinery.

The proliferation of sensors, computers, and wireless chips embedded in physical things is likely to generate a vast amount of data. Every time we buy a cup of coffee, search the web, send an email, or go for a run, data is collected and sent to the “cloud”. In fact, every rotation of an airplane turbine, every trip a car makes, every tune played on the radio generates a burst of raw data. Companies that can extract meaningful patterns from this incoming data will have created a powerful new kind of moat, one that grows with every new bit of information flowing their way. Google’s search engine, for example, mines the information buried in each search, so it gets a tiny bit more valuable every time we search and its formidable moat gets incrementally wider. No wonder Google’s market valuation has reached that of the industrial-age Godzilla ExxonMobil within just a decade of its going public (both are worth about 400 billion dollars as I write this). As the incoming CEO of IBM recently remarked, data is the “vast new natural resource for the next century.”

Eventually, the competitive advantages of superior information processing start showing up in the earnings numbers. Munger, who recently celebrated his 90th birthday, was one of the first of the traditional value investors to note:

Google has a huge new moat. In fact, I’ve probably never seen such a wide moat.

— Charlie Munger

The information economy is more than the technology sector

Finally, many companies that seem to be outside the technology sector may have information processing at the core of their value creation. For example, Express Scripts is a company in the healthcare sector that processes one out of every three prescriptions in the country; every time a patient presents a prescription to a pharmacist, an internet transaction is triggered asking the insurance company to approve payment for filling the prescription. This flow of information among doctor, patient, pharmacy, Express Scripts, and insurer is the key to analyzing the company’s value as part of the information economy we have been discussing (What are the network effects? Is the feedback loop virtuous or vicious?).

Similarly, many traditional value investors mistakenly analyze Amazon as a retailer. However, as described in his annual letters to shareholders, Bezos is deliberately and methodically trying to transform retail, with its high variable costs, into a business with the economics of a software company: high fixed but low variable costs! To fully understand the implications of this, and to evaluate the strength of Amazon’s moat, one must view it primarily as an information processor rather than as a retailer (although it is that as well).

Rediscovering Value

It seems clear, at least to me, that the old value investing strategy of avoiding technology stocks is no longer tenable as software keeps eating more and more of the world. This does not mean, however, that we can afford to throw away the hard-won insights of value investing. It is worth remembering that the Nasdaq crash of 2000 was led by “dotcom” information economy stocks! It is all too easy to get carried away by “new era” stories spun by today’s equivalents of castles-in-the-air companies like Pets.com, HomeGrocer.com, MySpace, and the like.

There is a lot to be learned from the great value investors over the past century. A stock will always be nothing but a piece of a business; its value will derive entirely from that business’s ability to withstand competitive erosion of its profits, i.e. its moat. Valuation always matters, and it helps to have a margin of safety when purchasing a stock. Long term returns are dominated by the ability of the underlying company to compound its earnings over the years. These principles are indeed eternal as well as universal; they apply with equal force in the information economy.

However, the economics of information goods is unusual and non-intuitive enough to merit a re-conceptualization, ab initio, of competitive advantage, moats, and the basics of valuation.

In the end I feel that this creative frisson is exactly what makes it an exciting time to live at the center of the ever-growing intersection of value investing and the information economy. Living on the fault line may be tricky, but the future looks promising – the world is coming our way!

Disclosure: As of the time of writing this post, I am long many of the information economy stocks mentioned in this post, including Amazon, Google, Facebook, Twitter, Express Scripts, Intel, and Microsoft. This post is not meant to be and should not be construed as investment advice of any sort. Investing is extremely difficult, the risk of permanent loss is high, and past results are meaningless in the future.

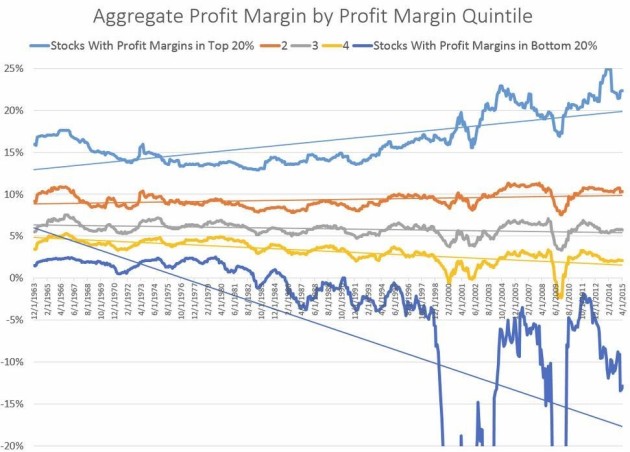

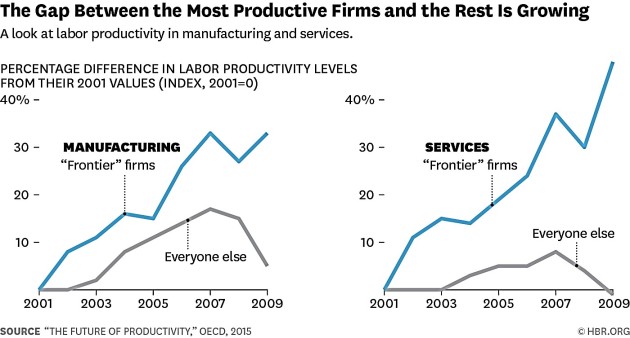

http://investorfieldguide.com/the-rich-are-getting-richer

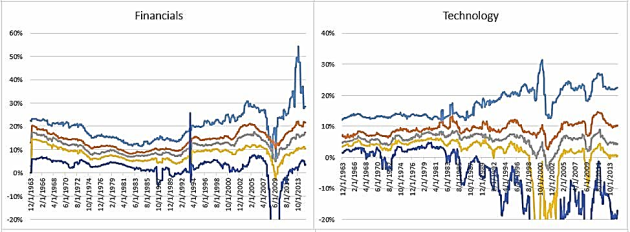

https://hbr.org/2015/08/productivity-is-soaring-at-top-firms-and-sluggish-everywhere-else